Coveted Raw Material: Data for Cutting-Edge Research

Technology giants zealously guard their data records, and strict laws slow down data transfer. So how does AI research in Germany organize its basic supply of data?

Since computers and their algorithms became powerful enough to recognize recurring patterns in large amounts of data, fields such as biomedicine, quantum physics, computer science and climate research have become unthinkable without methods of artificial intelligence (AI). These methods are also finding application in other disciplines, from the social sciences and psychology to criminology and art history.

But data is a jealously guarded raw material. To train algorithms for their later tasks, one needs data in large quantities, of good quality and in a suitable format. It must also be available at the touch of a button and updated frequently, because although data does not disappear, it does become obsolete. All this makes data a bottleneck in data-driven research, and ensuring its availability presents different scientific fields with very different challenges.

Secure access to data in Europe – but how?

Andreas Hotho is Professor of Data Science at the University of Würzburg. He investigates how machine learning can be used to evaluate large amounts of data and how the algorithms trained on it can later be applied in very different areas. Whether for monitoring climate data, in recommendation systems or for analyzing social media, Hotho needs large amounts of training data for his research. He cites the example of GPT-2, a system that continues a text once it is given an opening: it took the equivalent of 20 million pages of plain text to “learn” the patterns of language. Assembling such a 40-gigabyte package of precisely fitting data is difficult for an individual research project.

In the age of Big Data, access to large amounts of data is becoming increasingly important for science.

Whoever controls the search engines controls access to the world's information.

Things would be much easier if the big players in commercial data, such as Google or Facebook, made their data available to public research. But because data is at the core of their business models, they have no interest in collaborating. "When Facebook publishes a study, the company can, of course, claim more or less whatever it likes. The findings would carry more weight if one could verify them. But you can't do that because you can't access the data," Hotho complains. In view of the dominant market position of US companies, he is also concerned that Germany could one day be completely cut off from the data supply: "In order to access the knowledge collected on the Internet, we need search engines. Those who control the search engines control access to the world’s information. In Germany we don't have our own search engine. What we need to secure access to data is our own copy of the Internet. Although such a copy would be feasible, so far nothing is being done." For Hotho, this is a European project that has yet to be tackled.

Research data management - (also) a question of money

In basic research, data-driven methods present scientists with different challenges. Patrick Cramer is Director at the Max Planck Institute for Biophysical Chemistry in Göttingen and Head of the Department of Molecular Biology. He uses electron microscopy and X-ray crystallography to investigate the transcription and regulation of genetic material. "The original images that we collect in our research have very low contrast. Recently, however, we succeeded in using machine learning to increase the contrast between the actual protein particles and the noisy background data in such a way that the particles can be easily identified." This represents a leap in the quality of analysis.

In Cramer's research community, it is common practice to deposit research data in databases at the same time as publishing the results. "It’s a pity, however, that in our field there is no real obligation to make the raw data accessible as well. This way you lose the possibility to reprocess the data once you have developed better algorithms," explains Cramer. Raw data sets are often very large, however, which strains the infrastructure of individual laboratories. "And not everyone wants to release their raw data, because they hope to reap more from it at a later date."

Science policymakers are well aware that there is an acute need for action to supply research with data. The Federal Ministry of Education and Research is currently funding 21 research data management projects. At the same time, subject-specific platforms and formats are being developed. "There are now ongoing efforts worldwide to fill the databases," says Patrick Cramer. "That's why it would be a pity if parallel efforts were now launched in Germany." In his view, it would make more sense to strengthen existing structures financially.

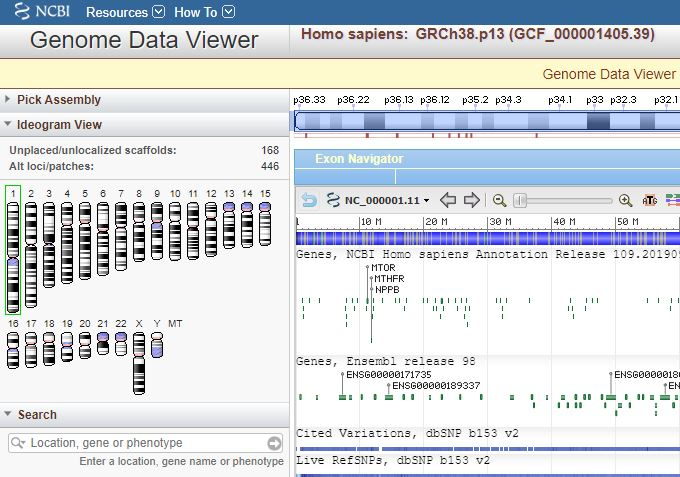

The National Center for Biotechnology Information (NCBI) in Bethesda, Maryland, provides access to important DNA, RNA and protein databases.

In basic research, which must of course first produce its own data, the availability of data is above all a question of money. In medical research, where data-driven methods offer particularly promising new opportunities, data protection poses an additional problem. "Patient data is particularly well protected, and that is a good thing. On the other hand, it cannot be denied that this prevents research from progressing as it should," says Cramer.

Voluntary data donations – seals of approval or trustee concepts secure standards

New formats are under discussion that aim to reconcile the interests of data protection and research. With a "data donation", for example, patients visiting their doctor could specify which of their data may be passed on for research purposes. In this context, the German Ethics Council has proposed a "seal of quality" for anonymized data.

The biggest challenge is to take into account the different data protection requirements.

Another model is based on the "trustee" concept. Dana Stahl heads the Independent Trust Office of the Greifswald University Hospital. In the six years of its existence, it has collected almost 10 million pseudonymized data records, including the consent forms of the study participants, who are often patients. Researchers can apply for access to this data via a transfer office.

"The greatest challenge," says Stahl, "is to take into account all the various data protection requirements and to integrate them into a software solution in a way that is user-friendly, and to keep it that way in the future." To meet this requirement, the trust office's medical IT specialists are in talks with data protection officers and ethics committees. "Little by little," says Stahl, "common standards are being established with other data centers, especially at state and federal level within the framework of the Medical Informatics Initiative." The aim is to merge data across locations, for example combining data on rare diseases or the results of cardiological studies with data from dementia research, always on the basis of the informed consent of the people concerned.

The Independent Trust Office of the Greifswald University Hospital supports technical infrastructures for medical research.

Legal challenges for "AI Made in Germany"

Is there now sufficient data for public research in Germany or not? "It’s not a matter of too little data," replies Steffen Augsberg, Professor of Public Law at the University of Giessen and member of the German Ethics Council, "but there are technical and legal difficulties in bringing the data together." He, too, sees the greatest problem in the diversity of regulations. Internationally, the problem becomes even bigger: "The General Data Protection Regulation has brought a degree of standardization for EU member states. At the same time, however, it has been the starting signal for many states to issue their own regulations." Anyone working in cross-border projects may therefore have to take even more regulations into account.

According to Augsberg, court proceedings have already been initiated to clarify legal uncertainties regarding the various data protection regulations. From his perspective, even the current practice of treating data as property that, like other possessions, can be kept under lock and key should be critically questioned: "As yet, we have no legally convincing answer to this challenge either."

So it may be that data protection is slowing down AI-supported research in Germany. On the other hand, the Federal Government sees the orientation towards basic values and the protection of privacy as a possible trademark of "AI Made in Germany". No algorithm can predict whether this will prove true.

About the author

Dr. Manuela Lenzen holds a doctorate in philosophy and writes as a freelance science journalist on topics including digitalization, artificial intelligence and cognition research, for publications such as FAZ, NZZ, Psychologie Heute, Bild der Wissenschaft and Gehirn und Geist. Her current book "Künstliche Intelligenz - Was sie kann und was uns erwartet" (C.H. Beck, Munich, 2018; available only in German, title translation "AI: What it can do and what awaits us") has been widely praised as an objective reference work.

Manuela Lenzen