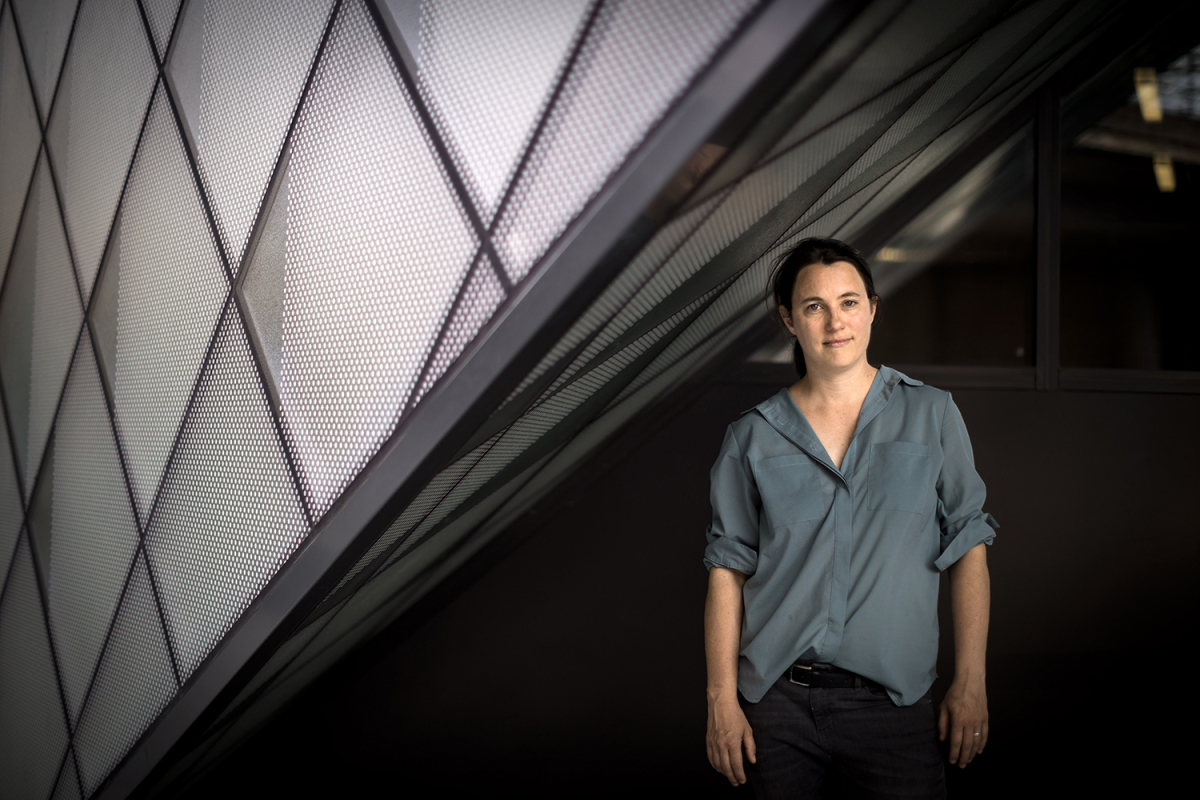

Gordon Welters

When does artificial intelligence become political?

Sabine Müller-Mall views AI from the perspective of a scholar of law and constitutional theory. In her opinion, we should take a closer look at the underlying algorithms, since they exert a normative effect and influence how we perceive things, communicate and act.

How do you approach the topic of AI?

Müller-Mall: I'm interested in the fundamental changes being brought about by AI. How do we perceive these changes – some people talk about a revolution – and how can we deal with them? And above all: How do we want to organize and shape the relationships between people under these new conditions? So the fundamental question of the political arises.

Aren't algorithms themselves neutral and thus not politicized?

Müller-Mall: No, the algorithms behind most AI technologies are neither neutral nor objective. They are based on assumptions that carry built-in value judgments: For instance, a tweet that provokes a lot of responses is treated as more relevant than others. Another example is credit scoring – the assessment of a person's creditworthiness depends more on their social environment, for instance their place of residence, than on their individual life situation.

By processing such assumptions and inscribing them into social contexts through their applications, algorithms themselves exert a normative effect. AI technologies not only make suggestions on how we could organize interpersonal relationships, but also help to shape them and incorporate their assessments as a normative foundation for our actions.

At the same time, they remain inaccessible and unreadable for most of us. This in turn triggers a feeling of insecurity, whether in dealing with voice assistants or social media. My approach is to focus not on the consequences but on an earlier stage, before these technologies are applied. That means looking at the programming of AI technologies themselves: What logical models are in play here, and what assumptions lie behind them and inscribe themselves into our social world?

Sabine Müller-Mall holds a professorship for legal and constitutional theory with interdisciplinary references at TU Dresden. The photos were taken in Berlin.

"Codification 3.0 - The Constitution of Artificial Intelligence" is the title of your project. The word "constitution" brings laws to mind. Are algorithms like laws in the world of AI?

Müller-Mall: Algorithms are of course not laws in the legal sense. But like laws, they control our behavior; they influence the way we perceive and evaluate the world. They are written in formalized language and permeate all areas of life. Algorithms, unlike laws, do not claim to be universally valid. But because they are inscribed everywhere in our actions, our perception and our communication, their shared basic assumption that decisions and actions are calculable and translatable into sequences of steps gains something like universality.

Many algorithms are aimed directly at guiding our behavior – for example, in the case of online shopping portals that literally "stalk" us with offers. How is that to be assessed?

Müller-Mall: As I said, behavioral control is often also the goal of laws in the legal sense – but the respective assumptions differ fundamentally: Liberal constitutional systems are based on a strong understanding of autonomy – that all people can act freely and change their opinion and behavior at any time, regardless of whether there actually is such a thing as "free" will. Algorithms, on the other hand, are often based on observable regularities in human action; they learn to recognize behavioral patterns, for example. In the case of algorithms, behavioral control focuses on the probable actions of people, not the unexpected or new. It normalizes action, perception and communication. In this respect, one could say that algorithmic normalization becomes a value and thus enters into a kind of normative competition with our own constitutionalized understanding of autonomy.

Is this also the political dimension of algorithms?

Müller-Mall: Exactly. When probabilities and regularities on the one hand, and calculation as a principle of interpreting the world on the other, dominate the social, there is a shift in the spaces of the political, the possibilities of freedom and the notion of equality. In this respect, the algorithmization of everyday life does not simply mean technological progress, but also that we redefine ourselves politically in a sense that needs to be explored.

What else fundamentally distinguishes algorithms from laws – to stick to the comparison?

Müller-Mall: Algorithms are not developed through the same process as laws. Legislation is a political process that is largely transparent and legally structured – and one in which we are also involved. We can discuss the underlying assumptions and goals politically and also influence them. Algorithms, on the other hand, are usually developed by private providers and are not transparent to others.

Finally, another difference lies in the way they are applied: laws are applied by courts, by judges who can also weigh up the merits of individual cases for the sake of fairness, and who, in addition to their formal expertise, bring life experience and empathy to the table. Because AI technologies apply algorithms mechanically, this dimension, the one that distinguishes judgment from mere decision, is missing.

So algorithms always decide "according to the rule"?

Müller-Mall: Not quite. Many algorithms are highly complex and actually capable of learning. But they follow a rational structure and are typically fed with collective data sets, which are then often statistically evaluated for individual decisions. An example would be the scoring method used by credit reporting agencies: If I live in an area of the city where, according to the data, the probability that loans will be repaid is very high, I have a better chance of getting a loan than if I live in a socially deprived neighborhood. With the help of AI, the credit reporting agency assigns me to a certain group, classifies me – I am perceived as an object and not as an individual. The same applies to the "predictive policing" used in some countries, which evaluates case data to calculate the probability of future crimes and uses it, for example, for sentence review.

So how should we as responsible citizens deal with artificial intelligence?

Müller-Mall: By having a fundamental discussion about which tasks we want to hand over to AI and which we do not, and, where we do, in what way. And also by addressing the political dimensions of AI politically. Personally, I think cultural pessimism and strict regulations are the wrong approach.